Database Relevancy - Records Verification (Data Controller Side)

My database has millions of records!

You can always find people boasting about the size of their mailing lists or the number of email addresses they recently obtained from a data broker.

Let them boast and do your thing.

Ask yourself the following question:

Would I rather send an email to a database of 100,000 records and receive 1,000 actions that are only semi-relevant to my processes, while facing complaints (which hurt the performance of my email infrastructure) and "right to be forgotten" requests (one of the individual rights under GDPR)? Or would I rather send an email to 10,000 records and receive 1,000 relevant actions?
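
The difference between the two options is easiest to see as a response rate, i.e. relevant actions divided by recipients. A trivial sketch using the figures from the question; the function name `response_rate` is hypothetical.

```python
def response_rate(actions: int, recipients: int) -> float:
    """Share of recipients who took a relevant action."""
    if recipients <= 0:
        raise ValueError("recipients must be positive")
    return actions / recipients


print(f"100K list: {response_rate(1_000, 100_000):.1%}")  # 100K list: 1.0%
print(f"10K list:  {response_rate(1_000, 10_000):.1%}")   # 10K list:  10.0%
```

The smaller list converts ten times better and sends 90,000 fewer messages that could trigger complaints or erasure requests.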

CASE STUDY:

This is a short story about a UK company operating in a lucrative market sector and serving wealthy individuals from around the world. After about 10 years of growing its customer database, the company reached the stage where keeping the quality of its communication at the highest level had become important. It decided to rebrand and needed customers to confirm that they understood the changes that were happening. A plan was created and execution began. First, we looked back into the historic data, which allowed us to group the customers in the database. We then started communicating in carefully created batches, using different messages based on behavioural data. In the process we identified 3,000 suspicious email addresses. They looked legitimate, and all of them responded correctly from a technical point of view (the contact details existed), but we realised they were in fact "empty" records.

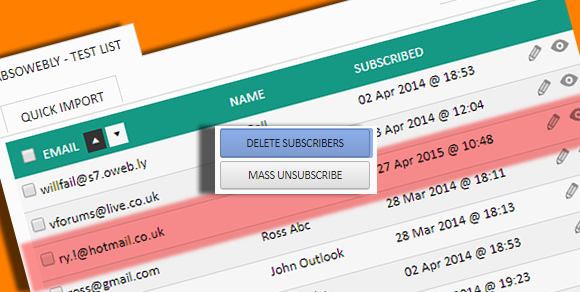

Sending costs aside, these 3,000 addresses had distorted the statistics in every past campaign report. While the delivery rate to these records was close to 100%, their open and click-through rates were consistently 0%. It was difficult to decide how to deal with them because we were not sure how large this "ghost" part of the database really was: due to the nature of the product, the gap between bursts of activity on valid records sometimes spanned 6-9 months. We needed a further six months of sending data to identify this group as accurately as possible. To improve our chances, we grouped the suspects and sent them a series of double opt-in emails asking recipients to verify their addresses themselves. After that exercise, we knew the exact size of our "ghost" database.
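
The "ghost" pattern described above (mail is delivered, but nobody ever opens or clicks) can be expressed as a simple rule over per-contact campaign statistics. This is an illustrative sketch, not the process used in the case study: the `ContactStats` fields, the six-send minimum and the 270-day (roughly nine-month) observation window are hypothetical values chosen to reflect the 6-9 month activity gaps mentioned above.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class ContactStats:
    email: str
    delivered: int     # messages accepted by the recipient's mail server
    opens: int         # tracked opens across all campaigns
    clicks: int        # tracked link clicks across all campaigns
    first_send: date   # date of the first campaign sent to this address


def find_ghost_suspects(contacts, today, min_sends=6, window_days=270):
    """Return addresses that accept mail but have never engaged.

    A contact is flagged only once it has been observed for at least
    `window_days` and has received `min_sends` delivered messages with
    zero opens and zero clicks; newer contacts are left alone.
    """
    cutoff = today - timedelta(days=window_days)
    return [
        c.email
        for c in contacts
        if c.first_send <= cutoff
        and c.delivered >= min_sends
        and c.opens == 0
        and c.clicks == 0
    ]


if __name__ == "__main__":
    contacts = [
        ContactStats("active@example.com", 12, 5, 2, date(2023, 1, 10)),
        ContactStats("ghost@example.com", 12, 0, 0, date(2023, 1, 10)),
        ContactStats("new@example.com", 2, 0, 0, date(2023, 11, 1)),
    ]
    print(find_ghost_suspects(contacts, today=date(2023, 12, 1)))
    # ['ghost@example.com']
```

Open tracking is imperfect (some mail clients block tracking images), so a flag from a rule like this is best treated as a reason to send a double opt-in verification email rather than as grounds for immediate deletion.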

Several years later, the same company, having implemented long-term back-end processes to sanitise its contact database through data verification and purging, works with about 10,000 highly qualified records. These records allow this small company to generate more than £1m in turnover, with profits well into six figures.

The conclusion from this case is simple: it pays to get rid of irrelevant data. What matters is not how big your database is, but how responsive the individuals and companies in it are.

To keep your existing records relevant, there are a few simple exercises you can do:

- Analyse your email campaign data in depth, looking for patterns,

- Address typical data-entry quality issues: if your data comes from an online form, someone may have mistyped .com as .con, or there may be other misspellings or typing errors,

- Verify data through simple domain checks (does the domain name exist, is it possible to establish a connection with a mail server on that domain, etc.),

- Check your business data: perhaps your client was taken over by another company and their email addresses have changed,

- Make sure the forms on your website, as well as on third-party websites, collect relevant data and display relevant privacy-policy information.
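
The data-entry and domain checks in the list above can be sketched in a few lines of Python. This is a minimal sketch under assumptions: the typo tables, the deliberately loose syntax pattern and the function names are hypothetical, and the domain check only asks whether the domain resolves, using the standard library's `socket.getaddrinfo`. It does not look up MX records or open an SMTP connection to the mail server, which would need a DNS library and more care.

```python
import re
import socket

# Hypothetical correction tables: build your own from typos seen in your form data.
DOMAIN_TYPOS = {"gmial.com": "gmail.com", "hotmial.com": "hotmail.com"}
TLD_TYPOS = {".con": ".com", ".cmo": ".com"}

# Deliberately loose: one "@", no whitespace, at least one dot in the domain.
EMAIL_RE = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")


def normalise_email(raw: str) -> str:
    """Trim whitespace, lower-case the domain and fix known domain typos."""
    local, sep, domain = raw.strip().rpartition("@")
    if not sep:
        return raw.strip()  # no "@": leave it for the syntax check to reject
    domain = domain.lower()
    domain = DOMAIN_TYPOS.get(domain, domain)
    for wrong, right in TLD_TYPOS.items():
        if domain.endswith(wrong):
            domain = domain[: -len(wrong)] + right
            break
    return f"{local}@{domain}"


def has_valid_syntax(email: str) -> bool:
    """Basic format check; fullmatch rejects trailing newlines and extra text."""
    return EMAIL_RE.fullmatch(email) is not None


def domain_resolves(domain: str, resolver=socket.getaddrinfo) -> bool:
    """Coarse existence check: does the domain resolve to any address?

    MX records are not queried, so a mail-only domain without A/AAAA
    records is reported as False; treat False as "review", not "delete".
    """
    try:
        resolver(domain, None)
        return True
    except (socket.gaierror, UnicodeError):
        return False


if __name__ == "__main__":
    cleaned = normalise_email("  Jane.Doe@Gmail.CON ")
    print(cleaned, has_valid_syntax(cleaned))  # Jane.Doe@gmail.com True
```

The resolver is passed in as a parameter so the check can be tested without network access, and so a proper MX lookup can be swapped in later.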

When verifying data, think on different levels: technical, business-strategic and operational.

3 Points to Take Away

1) Review the activity in your database: contacts who have not opened your messages for a year are not worth sending messages to at all!

You can always verify them by sending a double opt-in verification email.

2) Verify data on a regular basis, and make sure your online forms are set up to perform at least basic email format validation.

3) If you have a legitimate way of obtaining large datasets of relevant people (trade shows, exhibitions or partnerships with trade organisations), make sure you put data from these sources through the verification process.
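
Point 1 combines an inactivity rule with a double opt-in email. A minimal sketch of both follows, assuming you store each contact's last open time and sign the confirmation link with a secret key using Python's standard `hmac` module. The function names, the `email|timestamp|signature` token format and the 14-day link expiry are hypothetical; only the one-year threshold comes from the text above.

```python
import hashlib
import hmac
from datetime import datetime, timedelta, timezone

# Hypothetical secret: in practice load a long random value from configuration.
SECRET_KEY = b"replace-with-a-long-random-secret"


def needs_reconfirmation(last_open, now, max_idle_days=365):
    """True if the contact has never opened a message, or not within max_idle_days."""
    return last_open is None or now - last_open > timedelta(days=max_idle_days)


def _sign(payload: str) -> str:
    return hmac.new(SECRET_KEY, payload.encode(), hashlib.sha256).hexdigest()


def make_confirmation_token(email: str, issued_at: datetime) -> str:
    """Build "email|unix-timestamp|signature" for the double opt-in link."""
    payload = f"{email}|{int(issued_at.timestamp())}"
    return f"{payload}|{_sign(payload)}"


def verify_confirmation_token(token: str, now: datetime, max_age_days: int = 14):
    """Return the confirmed email, or None if the token is forged, malformed or expired.

    `now` must be timezone-aware (UTC) so it can be compared with the issue time.
    """
    try:
        email, ts, sig = token.rsplit("|", 2)
        issued = datetime.fromtimestamp(int(ts), tz=timezone.utc)
    except (ValueError, OverflowError, OSError):
        return None
    # Compare as bytes in constant time to avoid leaking signature prefixes.
    if not hmac.compare_digest(sig.encode(), _sign(f"{email}|{ts}").encode()):
        return None
    if now - issued > timedelta(days=max_age_days):
        return None
    return email


if __name__ == "__main__":
    now = datetime(2024, 6, 1, tzinfo=timezone.utc)
    print(needs_reconfirmation(datetime(2023, 3, 1, tzinfo=timezone.utc), now))  # True
    token = make_confirmation_token("jane@example.com", now)
    print(verify_confirmation_token(token, now + timedelta(days=1)))   # jane@example.com
    print(verify_confirmation_token(token, now + timedelta(days=30)))  # None
```

Signing the token means nobody can forge a confirmation for an address that never received the email. URL-encode the token (for example with `urllib.parse.quote`) before putting it in a link.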